The epiphany didn’t come from a breakthrough in model architecture or a surge in accuracy metrics. It came from watching a physiotherapist from Lviv stare blankly at a screen.

Dmytro Grybeniuk and Oleh Ivchenko, specialists in machine learning infrastructure, were attending the MedAI hackathon at Odesa National Polytechnic University not as competitors, but as judges. Yet, as reported by local Ukrainian media, the event turned into a brutal mirror for their own work.

They watched as the physiotherapist tried to interpret the “explainability” feature of a diagnostic prototype: a large, indistinct heatmap overlaid on an image. It was technically impressive, but clinically useless.

“My partner Oleh Ivchenko just looked at me and said, ‘We’re building for ourselves again,’” Grybeniuk told reporters in Odesa following the event.

This moment of reckoning highlights a pervasive crisis in the booming field of medical artificial intelligence. While venture capital pours billions into algorithms capable of detecting disease with superhuman accuracy, deployment in real-world clinics remains stalled by a fundamental barrier: trust. The question doctors were asking at the hackathon wasn’t “How accurate is it?” It was simpler and much harder to answer: “How do I know it’s working for the right reason?”

Following the event, Grybeniuk and Ivchenko scrapped months of work on their own framework, ScanLab, to address the gap between engineering intent and clinical reality. Their pivot offers a window into the necessary maturation of an industry moving from experimental hype to regulated practice.

Beyond the Heatmap

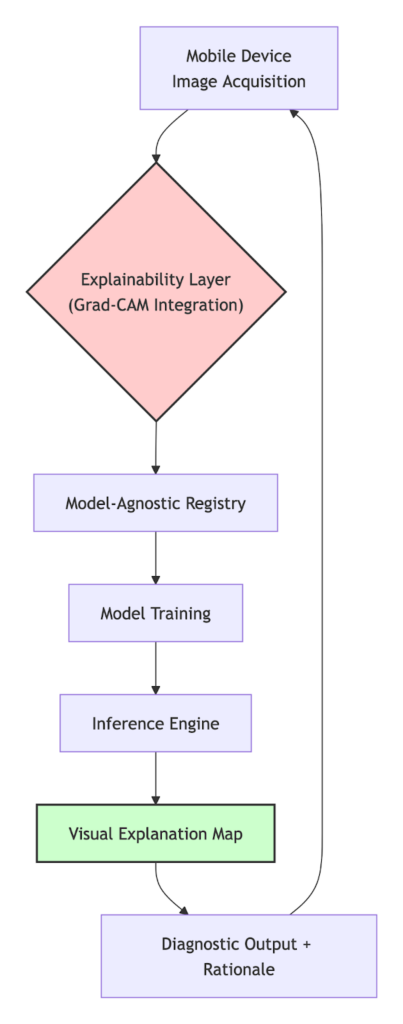

For years, the industry standard for “trust” has been explainability, often delivered via Grad-CAM heatmaps, which highlight the image regions that most influenced a model’s prediction. The Odesa hackathon showed that these vague, colorful blobs are not enough for a busy clinician making life-altering decisions.

Ivchenko re-engineered their system’s output layer. Instead of just showing where the model looked, the system now runs a secondary analysis on the heatmap itself to articulate what it saw in plain, clinical terms.

Where a previous model might simply output “Cancer: 98%,” their revised system is forced to provide a rationale: “Focal asymmetry in upper right quadrant, correlating with microcalcification cluster pattern.”
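The engineers have not published how ScanLab’s secondary analysis works. Purely as an illustration, the minimal Python sketch below shows one simple way to turn a heatmap into words: keep the pixels above a threshold, refuse to name a location if the hot area is too large (the “indistinct blob” case), and otherwise report the quadrant of the activation-weighted centre. The function name `describe_heatmap`, the 0.5 threshold and the 10% “focal” cutoff are hypothetical choices. Producing clinical vocabulary such as “microcalcification cluster” would require a separate trained classifier, which this sketch does not attempt.

```python
from typing import List

Heatmap = List[List[float]]


def describe_heatmap(heatmap: Heatmap, threshold: float = 0.5, focal_max: float = 0.1) -> str:
    """Summarize a saliency heatmap as a short, location-based sentence.

    Hypothetical helper. Assumes values are normalized to [0, 1] and that
    "upper"/"right" are image coordinates (row 0 at the top), not anatomical sides.
    """
    rows = len(heatmap)
    cols = len(heatmap[0]) if rows else 0
    if rows == 0 or cols == 0:
        raise ValueError("heatmap must be non-empty")

    # Keep only the "hot" pixels at or above the attention threshold.
    hot = [
        (r, c, v)
        for r, row in enumerate(heatmap)
        for c, v in enumerate(row)
        if v >= threshold
    ]
    if not hot:
        return "No region exceeded the attention threshold."

    coverage = len(hot) / (rows * cols)
    # A large hot area is the "indistinct blob" case: say so instead of guessing a location.
    if coverage > focal_max:
        return f"Diffuse activation covering {coverage:.0%} of image; no single focal region."

    # Activation-weighted centroid, measured at pixel centers (index + 0.5).
    total = sum(v for _, _, v in hot)
    cy = sum((r + 0.5) * v for r, _, v in hot) / total
    cx = sum((c + 0.5) * v for _, c, v in hot) / total

    vertical = "upper" if cy < rows / 2 else "lower"
    horizontal = "left" if cx < cols / 2 else "right"
    return f"Focal activation in {vertical} {horizontal} quadrant ({coverage:.0%} of image)."
```

On a 10×10 map with two hot pixels near the top-right corner, this returns “Focal activation in upper right quadrant (2% of image).” Declining to localize a diffuse map is a deliberate choice: a confident-sounding sentence about a blob would reproduce the problem the judges saw.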

They also addressed the practical friction of using AI in a hospital workflow. Observing that requiring doctors to upload scans to the cloud was a non-starter, they moved the analysis onto the device itself, an approach known as “edge inference.” The entire analysis now runs on a mobile device in under a second, allowing a doctor to point a phone at an X-ray on a lightbox and see the rationale instantly, with no cloud round trip and no patient images leaving the device.

The Era of “Forensic MLOps”

The changes, however, go deeper than the user interface. They reflect a shift made in anticipation of tighter regulation, such as the looming EU AI Act.

The engineers argue that the term “explainable” is too soft for medicine. “It implies a suggestion,” Ivchenko said.

Instead, their focus for 2026 is on creating an immutable “audit trail,” built on what Ivchenko calls “forensic MLOps” (machine learning operations).

The goal is for every inference the AI makes to leave a permanent, traceable log entry. That entry would record the exact model version, checksums of the specific data slice the model was trained on, and how its performance differs from previous iterations.
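To make the idea concrete, here is a minimal Python sketch of such a log, assuming a simple in-memory store. The field names, `InferenceRecord` and `AuditTrail` are hypothetical. The tamper-evidence comes from hash chaining, a common technique in which each entry stores the SHA-256 hash of the entry before it. This is not a design the engineers have described, only one way to make “immutable” checkable.

```python
import hashlib
import json
from dataclasses import asdict, dataclass
from typing import Dict, List


def sha256_hex(data: bytes) -> str:
    """Hex SHA-256 digest, used here for both data checksums and record hashes."""
    return hashlib.sha256(data).hexdigest()


@dataclass(frozen=True)
class InferenceRecord:
    # Hypothetical field names mirroring the log contents described above.
    model_version: str
    training_data_checksum: str
    metric_delta_vs_previous: Dict[str, float]
    input_checksum: str
    prediction: str
    rationale: str
    prev_record_hash: str

    def digest(self) -> str:
        # Canonical JSON (sorted keys) so an identical record always hashes identically.
        payload = json.dumps(asdict(self), sort_keys=True).encode("utf-8")
        return sha256_hex(payload)


class AuditTrail:
    """Append-only log in which each record commits to the hash of the one before it."""

    GENESIS = "0" * 64

    def __init__(self) -> None:
        self.records: List[InferenceRecord] = []

    def append(
        self,
        model_version: str,
        training_data_checksum: str,
        metric_delta: Dict[str, float],
        scan_bytes: bytes,
        prediction: str,
        rationale: str,
    ) -> InferenceRecord:
        prev_hash = self.records[-1].digest() if self.records else self.GENESIS
        record = InferenceRecord(
            model_version=model_version,
            training_data_checksum=training_data_checksum,
            metric_delta_vs_previous=dict(metric_delta),
            input_checksum=sha256_hex(scan_bytes),  # store a checksum, not the scan itself
            prediction=prediction,
            rationale=rationale,
            prev_record_hash=prev_hash,
        )
        self.records.append(record)
        return record

    def verify(self) -> bool:
        """Return False if any record's back-link no longer matches its predecessor."""
        expected = self.GENESIS
        for record in self.records:
            if record.prev_record_hash != expected:
                return False
            expected = record.digest()
        return True
```

Editing any earlier entry, for example changing a past prediction, breaks the link stored in the entry after it, so `verify()` returns `False`. A chain alone cannot protect its newest entry; in practice the latest hash would also need to be copied to a separate write-once store.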

If a doctor disputes an AI’s finding, they need a technical pathway to challenge the “black box.”

“A doctor needs to know not just why the AI said tumor,” Grybeniuk said, “but which version of the AI said it, and what changed since last Tuesday that might affect this call.”

According to the engineers, the headline-grabbing research in medical AI is happening elsewhere, backed by massive budgets. Their goal following the Odesa reality check is quieter, but perhaps more critical: building the unglamorous infrastructure of trust, brick by brick, so that when these models finally arrive in a clinic in Kyiv or Kansas, doctors won’t just stare blankly at the screen.